Installing Apache hadoop-3.2.1 on a Mac: A Tutorial

Java environment setup

Install Java, then check the Java version to confirm that the installation succeeded:

```bash
$ java -version
```
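The Hadoop Java Versions wiki page lists Java 8 as the only supported version for Hadoop 3.0.x to 3.2.x, with Java 11 (runtime only) arriving in 3.3. So it is worth checking the major version, not just that `java` runs. Here is a minimal sketch. It assumes the first line of `java -version` has the usual `... version "X"` form. The function name `java_major_version` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the Java major version from the first line of `java -version`,
# e.g. 'java version "1.8.0_191"' -> 8, 'openjdk version "11.0.2" 2019-01-15' -> 11.
java_major_version() {
  local v
  # Keep only the text between the quotes after "version".
  v=$(printf '%s\n' "$1" | sed -E 's/.*version "([^"]+)".*/\1/')
  case "$v" in
    1.*)
      # Legacy scheme (Java 8 and earlier): 1.8.0_191 -> 8
      v=${v#1.}
      printf '%s\n' "${v%%.*}"
      ;;
    *)
      # Newer scheme (Java 9+): 11.0.2 -> 11, 17 -> 17
      printf '%s\n' "${v%%[.+-]*}"
      ;;
  esac
}

# Usage (java prints its version on stderr):
#   major=$(java_major_version "$(java -version 2>&1 | head -n 1)")
#   [ "$major" = 8 ] || echo "Hadoop 3.2.x expects Java 8, found Java $major" >&2
```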

Look up where Java is installed. You will need this path later when configuring Hadoop's runtime environment:

```bash
$ /usr/libexec/java_home
```

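SSH setup

Configure SSH

Hadoop's start scripts launch the daemons over SSH, even on a single machine, so you need to be able to SSH to localhost without a password. Add your public key to authorized_keys:

```bash
$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
```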

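Create an SSH public key

If you do not have an SSH key yet (that is, ~/.ssh/id_rsa.pub does not exist), create one with the following command before running the `cat` command above:

```bash
$ ssh-keygen -t rsa
```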

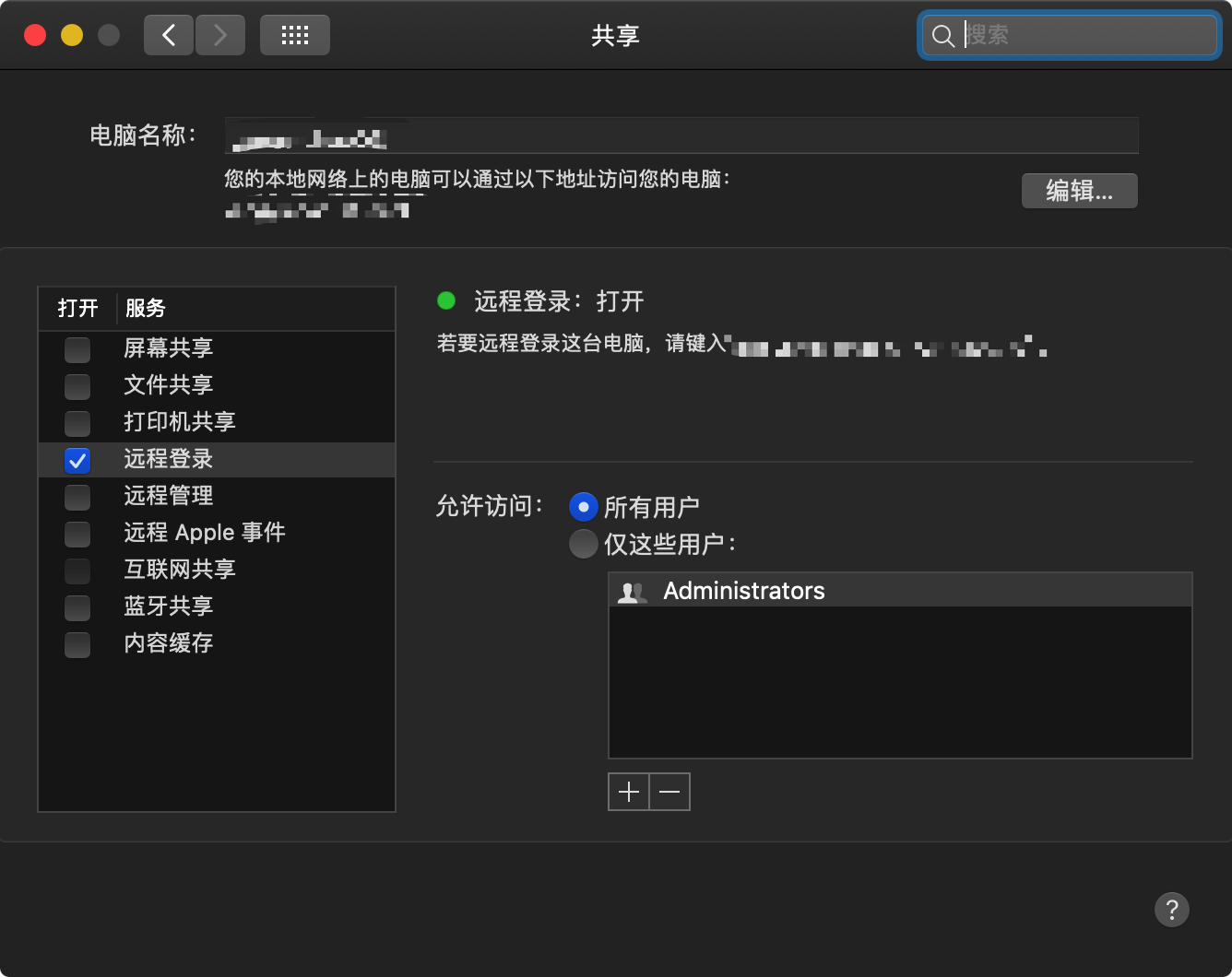
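The two key steps can be combined into one idempotent function. Re-running it neither overwrites an existing key nor appends the same key twice. This sketch makes several assumptions:

- The key lives at ~/.ssh/id_rsa.
- An empty passphrase is acceptable for a local test setup, as in the Apache single-node setup guide.
- The function name `authorize_local_key` and its optional directory argument are hypothetical. The argument exists only so the logic can be tried in a scratch directory.

The `chmod` calls matter because sshd ignores an authorized_keys file that is group- or world-writable.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make sure a key exists and is authorized for SSH to this machine.
# $1 (optional): SSH directory, defaulting to ~/.ssh.
authorize_local_key() {
  local ssh_dir="${1:-$HOME/.ssh}"
  local key="$ssh_dir/id_rsa"
  local auth="$ssh_dir/authorized_keys"

  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"

  # ASSUMPTION: a passphrase-less RSA key is fine for a local setup; only created if missing.
  if [ ! -f "$key.pub" ]; then
    ssh-keygen -t rsa -P '' -f "$key" -q
  fi

  touch "$auth"
  # Append the public key only if that exact line is not already present.
  if ! grep -qxF -- "$(cat "$key.pub")" "$auth"; then
    cat "$key.pub" >> "$auth"
  fi
  chmod 600 "$auth"
}

# Usage:
#   authorize_local_key
```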

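Enable Remote Login

Turn on Remote Login under System Preferences > Sharing, then test it. If you are logged in without being asked for a password, the SSH setup works:

```bash
$ ssh localhost
```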
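`ssh localhost` is interactive. Hadoop's start scripts need SSH to work without any prompt, and one way to check that non-interactively is OpenSSH's BatchMode option, which makes ssh fail instead of asking for a password. This is a sketch, and the function name is hypothetical. Make the first connection to localhost interactively once anyway, so its host key is recorded in known_hosts.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if `ssh HOST` works without any password prompt.
can_ssh_without_password() {
  local host="${1:-localhost}"
  # BatchMode=yes: fail instead of asking for a password or passphrase.
  # ConnectTimeout=5: give up after 5 seconds if nothing answers.
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true
}

# Usage:
#   can_ssh_without_password && echo "passwordless SSH to localhost works"
```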

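Install Hadoop

Install Hadoop with brew

brew generally installs the latest Hadoop release. For me that was Hadoop 3.2.1.

If you need a different Hadoop version, use brew to install that specific version instead.

```bash
$ brew install hadoop
```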

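Note the download line in brew's output. By default, brew downloads through Apache's official mirror system:

```
==> Downloading https://www.apache.org/dyn/closer.cgi?path=hadoop/common/hadoop-3.2.1/hadoop-3.2.1.tar.gz
```

If the download is slow, you can download from a mirror in China instead, such as the Tsinghua University Open Source Software Mirror:

```
==> Downloading from http://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.2.1/hadoop-3.2.1.tar.gz
```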
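One way to use the mirror without touching the formula is to pre-fill Homebrew's download cache:

1. Download the archive yourself.
2. Save it at the path printed by `brew --cache hadoop`.
3. Run `brew install hadoop`, which should then reuse the file.

Homebrew still verifies the formula's checksum, so only an identical archive is accepted. This sketch rests on these assumptions:

- The mirror uses the same hadoop/common/hadoop-VERSION/ layout as the URLs above.
- The mirror still hosts the requested version; mirrors usually keep only recent releases.
- brew downloads the Apache tarball rather than a prebuilt bottle, as the log above suggests.
- The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the tarball URL for a Hadoop version on an Apache mirror.
# The default base is the Tsinghua mirror path from the log above.
hadoop_mirror_url() {
  local version="$1"
  local base="${2:-http://mirrors.tuna.tsinghua.edu.cn/apache}"
  printf '%s/hadoop/common/hadoop-%s/hadoop-%s.tar.gz\n' "$base" "$version" "$version"
}

# Hypothetical helper: pre-seed Homebrew's download cache from the mirror.
prefetch_hadoop() {
  local version="$1"
  local cache_file
  # Path where Homebrew expects the hadoop download to be cached.
  cache_file=$(brew --cache hadoop) || return 1
  # -f: fail on HTTP errors instead of saving an error page; -L: follow redirects.
  curl -fL -o "$cache_file" "$(hadoop_mirror_url "$version")"
}

# Usage (macOS with Homebrew):
#   prefetch_hadoop 3.2.1 && brew install hadoop
```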

After installation, check where Hadoop was installed:

```bash
$ brew info hadoop
```

Configure Hadoop

All the configuration files you need to edit are in /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop.
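The Cellar path above contains the version number, so it changes after an upgrade. This sketch derives the directory from `brew --prefix hadoop` instead. That command prints a version-independent link to the installed keg, such as /usr/local/opt/hadoop. The function name and its optional prefix argument are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print Hadoop's configuration directory for a Homebrew install.
# With no argument it asks Homebrew for the prefix (e.g. /usr/local/opt/hadoop);
# passing a prefix explicitly skips the brew call.
hadoop_conf_dir() {
  local prefix="${1:-$(brew --prefix hadoop)}"
  printf '%s/libexec/etc/hadoop\n' "$prefix"
}

# Usage:
#   CONF_DIR=$(hadoop_conf_dir)
#   ls "$CONF_DIR"/hadoop-env.sh "$CONF_DIR"/core-site.xml "$CONF_DIR"/hdfs-site.xml
```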

hadoop-env.sh

Set `export JAVA_HOME`.

Fill in the Java path reported by /usr/libexec/java_home, and remember to remove the leading comment character `#`:

```bash
export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home
```
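Editing the file by hand works. For a repeatable setup, this sketch leaves exactly one active `export JAVA_HOME` line in hadoop-env.sh and does not touch the commented template lines. The function name is hypothetical. When no path is given, it asks `/usr/libexec/java_home -v 1.8` (macOS only) for a Java 8 home, matching the jdk1.8 path above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: ensure hadoop-env.sh has exactly one uncommented JAVA_HOME export.
# $1: path to hadoop-env.sh; $2 (optional): Java home, defaulting to the Java 8 home on macOS.
set_hadoop_java_home() {
  local env_file="$1"
  [ -f "$env_file" ] || return 1
  local java_home="${2:-$(/usr/libexec/java_home -v 1.8)}"
  local tmp
  tmp=$(mktemp) || return 1
  # Drop any existing active (uncommented) JAVA_HOME export; `|| true` because
  # grep -v exits 1 when every line is filtered out.
  grep -v -E '^[[:space:]]*export JAVA_HOME=' "$env_file" > "$tmp" || true
  printf 'export JAVA_HOME="%s"\n' "$java_home" >> "$tmp"
  # Copy back with cat so the original file keeps its permissions.
  cat "$tmp" > "$env_file"
  rm -f "$tmp"
}

# Usage:
#   set_hadoop_java_home /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/hadoop-env.sh
```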

core-site.xml

Edit core-site.xml to set the NameNode's host name and port:

```xml
<configuration>
```
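A minimal core-site.xml for a single-node setup sets fs.defaultFS, the property that names the NameNode's host and port. This sketch writes such a file. The value hdfs://localhost:9000 is an assumption taken from the Apache single-node setup guide, not from this post. The function name is hypothetical, and the function overwrites any existing core-site.xml in the target directory.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a minimal pseudo-distributed core-site.xml into $1.
# ASSUMPTION: NameNode URI hdfs://localhost:9000 (Apache single-node guide); override with $2.
# Note: this replaces any existing core-site.xml in that directory.
write_core_site() {
  local conf_dir="$1"
  local fs_uri="${2:-hdfs://localhost:9000}"
  cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <property>
        <!-- Default file system URI: the NameNode's host and RPC port -->
        <name>fs.defaultFS</name>
        <value>${fs_uri}</value>
    </property>
</configuration>
EOF
}

# Usage:
#   write_core_site /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop
```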

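hdfs-site.xml

The property dfs.replication sets how many copies of each HDFS data block are kept. The default is 3. Since we have only one host, running a single DataNode in pseudo-distributed mode, set it to 1:

```xml
<configuration>
```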
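The matching hdfs-site.xml needs only the replication property described above. This sketch is in the same style. The function name is hypothetical, and the function overwrites any existing hdfs-site.xml in the target directory.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a minimal pseudo-distributed hdfs-site.xml into $1.
# $2 (optional): replication factor, defaulting to 1 for a single DataNode.
# Note: this replaces any existing hdfs-site.xml in that directory.
write_hdfs_site() {
  local conf_dir="$1"
  local replication="${2:-1}"
  cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <property>
        <!-- One copy per block: a single-node cluster has only one DataNode -->
        <name>dfs.replication</name>
        <value>${replication}</value>
    </property>
</configuration>
EOF
}

# Usage:
#   write_hdfs_site /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop
```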

Format

Format HDFS only once, before the first start. Formatting again later erases all data stored in HDFS.

```bash
$ hdfs namenode -format
```
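An accidental second format is destructive, so a guard can first check whether the NameNode's metadata directory already holds a formatted image. A formatted directory contains current/VERSION. This sketch assumes the default location: dfs.namenode.name.dir is ${hadoop.tmp.dir}/dfs/name, and hadoop.tmp.dir is /tmp/hadoop-<user>. If hdfs-site.xml overrides the directory, pass the real path. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: format the NameNode only if it has never been formatted.
# ASSUMPTION: default dfs.namenode.name.dir = /tmp/hadoop-<user>/dfs/name.
default_name_dir() {
  printf '/tmp/hadoop-%s/dfs/name\n' "$(id -un)"
}

namenode_is_formatted() {
  local name_dir="${1:-$(default_name_dir)}"
  # Formatting writes current/VERSION into the NameNode storage directory.
  [ -f "$name_dir/current/VERSION" ]
}

format_namenode_once() {
  local name_dir="${1:-$(default_name_dir)}"
  if namenode_is_formatted "$name_dir"; then
    echo "NameNode already formatted in $name_dir; skipping to avoid erasing HDFS metadata." >&2
    return 0
  fi
  hdfs namenode -format
}

# Usage:
#   format_namenode_once
```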

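Start the services

From the sbin directory of the Hadoop installation (for this Homebrew install, /usr/local/Cellar/hadoop/3.2.1/libexec/sbin), start all the Hadoop daemons (HDFS and YARN):

```bash
$ ./start-all.sh
```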
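To confirm that the daemons actually came up, use `jps`, which ships with the JDK and lists running Java processes by class name. After start-all.sh on a single-node setup, expect five daemons: NameNode, DataNode, SecondaryNameNode, ResourceManager, and NodeManager. This sketch reports which of them are missing. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin (lines like "12345 NameNode")
# and print each expected daemon that is not running, one per line.
missing_daemons() {
  local running d
  running=$(awk '{print $2}')
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    if ! printf '%s\n' "$running" | grep -qx "$d"; then
      echo "$d"
    fi
  done
}

# Usage (no output means all five daemons are up):
#   jps | missing_daemons
```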

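Once startup succeeds, you can check the services in a browser:

http://localhost:9870/ (HDFS NameNode web UI)

http://localhost:8088/cluster (YARN ResourceManager web UI)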
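The same check can be scripted with curl, which helps when no browser is at hand. In this sketch, any HTTP 2xx or 3xx response counts as "up". The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if URL answers with an HTTP 2xx or 3xx status.
web_ui_up() {
  local url="$1"
  local code
  # -s: silent; -o /dev/null: discard the body; -w: print only the status code.
  # curl prints 000 when it cannot connect; `|| true` keeps that from aborting under set -e.
  code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url" || true)
  case "$code" in
    2??|3??) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage:
#   for url in http://localhost:9870/ http://localhost:8088/cluster; do
#     if web_ui_up "$url"; then echo "up: $url"; else echo "down: $url"; fi
#   done
```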

Stop the services

```bash
$ ./stop-all.sh
```